Playing with VXLANs and Proxmox VE

Introduction

With the SDN (software-defined network) feature of Proxmox VE, it is a lot easier to set up advanced networks. One such technology is VXLAN. In short, it spans a layer-2 network across different nodes. In the networking world, the endpoints of a VXLAN are known as VTEPs (VXLAN Tunnel Endpoints).

The traffic between the VTEPs is transported in UDP packets. We need a network that connects the endpoints, the so-called underlay network.
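To make this a bit more concrete: per RFC 7348, each Ethernet frame from a guest gets an 8-byte VXLAN header and is then sent inside a UDP datagram, with 4789 as the IANA-assigned destination port. Most of that header is the 24-bit VXLAN Network Identifier (VNI), which keeps different VXLANs apart. The sketch below uses a hypothetical helper, `vxlan_header_hex`, that only prints the header for a given VNI in hex to show its layout; it does not send anything.

```shell
#!/usr/bin/env bash
# Sketch: print the 8-byte VXLAN header (RFC 7348) for a given VNI as hex.
# vxlan_header_hex is a hypothetical helper, for illustration only.
#
# Layout: | flags (1 byte) | reserved (3) | VNI (3) | reserved (1) |
# The flags byte 0x08 sets the "I" bit, which marks the VNI field as valid.
vxlan_header_hex() {
  local vni=$1
  # The VNI is 24 bits wide, so valid values are 0..16777215.
  if [ "$vni" -lt 0 ] || [ "$vni" -gt 16777215 ]; then
    echo "VNI out of range: $vni" >&2
    return 1
  fi
  # flags + 3 reserved bytes, then the VNI as 6 hex digits, then 1 reserved byte
  printf '08000000%06x00\n' "$vni"
}

vxlan_header_hex 6000   # prints 0800000000177000 (6000 = 0x001770)
```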

The underlay network really depends on the circumstances. In a datacenter, a spine-leaf architecture, in which every leaf switch connects to every spine switch, is the current buzz for building a network with good redundancy and load balancing.

```text
╭ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─╮
             Underlay network
│       ┏━━━━━━━━━━━━━━━━━━━━━━━━┓       │
╰ ─ ─┬─ ┃ ─ ─ ─ ─ ─ ┬ ─ ─ ─ ─ ─ ─┃─┬─ ─ ─╯
     │  ┃           │            ┃ │
╔════╧═════╗   ╔════╧═════╗   ╔════╧═════╗
║  VTEP 1  ║   ║  VTEP 2  ║   ║  VTEP 3  ║
╟───────┰──╢   ╟──────────╢   ╟──┰───────╢
║       ┃  ║   ║          ║   ║  ┃       ║
╟───────●──╢   ╟──────────╢   ╟──▼───────╢
║ Client A ║   ║ Client B ║   ║ Client C ║
╚══════════╝   ╚══════════╝   ╚══════════╝
```

Scenario

Since we can define the VXLAN peers freely in the SDN configuration, why not connect two separate Proxmox VE instances? A common setup in a homelab-like environment could be one Proxmox VE instance (or a cluster of several nodes) locally, and another, separate Proxmox VE instance running in a datacenter somewhere.

```text
╭────────────╮            ╭────────────╮
│ PVE local  │  underlay  │   PVE DC   │
├────────────┤  network   ├────────────┤
│ Peer IP A  ├────────────┤ Peer IP B  │
├────────────┤            ├────────────┤
│ VXLAN 6000 │            │ VXLAN 6000 │
╰─┬────────┬─╯            ╰─┬────────┬─╯
  │  VM 1  │                │  VM 2  │
  ╰────────╯                ╰────────╯
```

The VXLAN traffic itself isn't encrypted. Therefore, if you plan to use it over public connections, you will need another layer that takes care of encryption. In a more corporate environment, this could be a site-to-site VPN. For our more homelabby situation, I am using a WireGuard connection (based on Tailscale) to connect the two Proxmox VE instances.

Keep in mind that there isn't much redundancy in the underlay network here!

Configuration

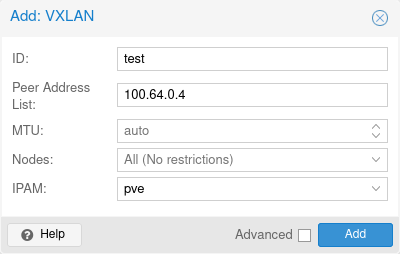

Once we have the underlay network up and running, we can configure the SDN on both sides. On the first node, in the web UI, go to Datacenter → SDN → Zones and Add a VXLAN zone.

Since we connect only two hosts, the Peer Address List contains only one address, that of the remote peer. If we had more machines, this would be a comma- or space-separated list.
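The zone dialog also has an MTU field, which matters when the underlay is itself a tunnel. With an IPv4 underlay, VXLAN adds 50 bytes per frame (14 bytes inner Ethernet header, 8 bytes VXLAN, 8 bytes UDP, 20 bytes outer IPv4), so the MTU inside the VXLAN has to be at least that much smaller than the underlay's. A minimal sketch, assuming an IPv4 underlay, with a hypothetical helper `vxlan_mtu`:

```shell
#!/usr/bin/env bash
# Sketch: derive the VXLAN MTU from the underlay MTU, assuming an IPv4 underlay.
# Per-frame overhead: 14 (inner Ethernet) + 8 (VXLAN) + 8 (UDP) + 20 (outer IPv4).
VXLAN_OVERHEAD_IPV4=50

vxlan_mtu() {
  local underlay_mtu=$1
  echo $(( underlay_mtu - VXLAN_OVERHEAD_IPV4 ))
}

# Hypothetical usage on a PVE host, reading the Tailscale interface's MTU:
#   vxlan_mtu "$(cat /sys/class/net/tailscale0/mtu)"
vxlan_mtu 1500   # prints 1450
vxlan_mtu 1280   # prints 1230
```

A Tailscale interface typically comes with an MTU of 1280, which would leave 1230 bytes inside the VXLAN; it is worth checking the actual value with `ip link show` on both hosts.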

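Next, we need a VNet, which we can create at Datacenter → SDN → VNets. Here it is called vxlan1, belongs to the VXLAN zone we just created, and uses the tag (VXLAN ID) 6000.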

Do the same two steps on the second node, this time with the correct list of remote peers (here, the address of the first node). The last step is to apply these settings on both nodes, which you can do with the Apply button in the main Datacenter → SDN panel.
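On the command line, the same steps can likely be done through the Proxmox VE API with `pvesh`. The following is a sketch assuming the Proxmox VE 8 API paths `/cluster/sdn/zones`, `/cluster/sdn/vnets` and `/cluster/sdn`; the zone ID `vxzone`, the peer address and the MTU in the usage line are placeholders, while `vxlan1` and 6000 match this setup. Run it as root on each instance with the respective remote peer.

```shell
#!/usr/bin/env bash
# Sketch: CLI counterpart of the GUI steps via the Proxmox VE API (pvesh).
# Run as root on each Proxmox VE instance, passing the *remote* peer address.

setup_vxlan_sdn() {
  local zone=$1 vnet=$2 tag=$3 peers=$4 mtu=$5

  # Datacenter -> SDN -> Zones -> Add -> VXLAN
  pvesh create /cluster/sdn/zones --type vxlan --zone "$zone" \
    --peers "$peers" --mtu "$mtu" || return 1

  # Datacenter -> SDN -> VNets -> Create (the tag is the VXLAN ID)
  pvesh create /cluster/sdn/vnets --vnet "$vnet" --zone "$zone" \
    --tag "$tag" || return 1

  # Datacenter -> SDN -> Apply
  pvesh set /cluster/sdn
}

# Usage (zone ID, peer IP and MTU are placeholders; MTU = underlay MTU - 50):
#   setup_vxlan_sdn vxzone vxlan1 6000 100.64.0.2 1230
```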

First tests

Let's create a guest on each Proxmox VE host and attach its NIC to the vxlan1 bridge, which should now be available. We also need to configure the IPs manually, as there is no DHCP server running in that network.
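On the command line, this step could look roughly like the sketch below: `qm set` on the Proxmox VE host puts the guest's first NIC into the vxlan1 bridge, and `ip` inside a Linux guest assigns the static address. VM ID 100 and the interface name eth0 are hypothetical, and addresses set with `ip` do not survive a reboot.

```shell
#!/usr/bin/env bash
# Sketch for the first test. VM ID 100 and interface eth0 are hypothetical.

# On the Proxmox VE host: put the VM's first NIC into the vxlan1 VNet bridge.
# Note: this replaces any existing net0 definition of the VM.
attach_to_vxlan() {
  local vmid=$1
  qm set "$vmid" --net0 virtio,bridge=vxlan1
}

# Inside the Linux guest: bring the NIC up and assign a static address
# (not persistent across reboots).
set_guest_ip() {
  local dev=$1 cidr=$2
  ip link set dev "$dev" up
  ip address add "$cidr" dev "$dev"
}

# Usage:
#   on PVE local:  attach_to_vxlan 100
#   inside VM 1:   set_guest_ip eth0 10.60.0.1/24
#   inside VM 2:   set_guest_ip eth0 10.60.0.2/24
```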

In the end, my test scenario looks like this:

```text
╭────────────╮            ╭────────────╮
│ PVE local  │  underlay  │   PVE DC   │
├────────────┤  network   ├────────────┤
│ VTEP IP A  ├────────────┤ VTEP IP B  │
├────────────┤            ├────────────┤
│ VXLAN 6000 │            │ VXLAN 6000 │
├────────────┤            ├────────────┤
│    VM 1    │            │    VM 2    │
│10.60.0.1/24│            │10.60.0.2/24│
╰────────────╯            ╰────────────╯
```

Let's see if a ping between both works…

```text
root@vm1:~# ping -c4 10.60.0.2
PING 10.60.0.2 (10.60.0.2) 56(84) bytes of data.
64 bytes from 10.60.0.2: icmp_seq=1 ttl=64 time=27.6 ms
64 bytes from 10.60.0.2: icmp_seq=2 ttl=64 time=23.0 ms
64 bytes from 10.60.0.2: icmp_seq=3 ttl=64 time=23.5 ms
64 bytes from 10.60.0.2: icmp_seq=4 ttl=64 time=23.3 ms
```

```text
root@vm2:~# ping -c4 10.60.0.1
PING 10.60.0.1 (10.60.0.1) 56(84) bytes of data.
64 bytes from 10.60.0.1: icmp_seq=1 ttl=64 time=22.7 ms
64 bytes from 10.60.0.1: icmp_seq=2 ttl=64 time=22.8 ms
64 bytes from 10.60.0.1: icmp_seq=3 ttl=64 time=23.0 ms
64 bytes from 10.60.0.1: icmp_seq=4 ttl=64 time=24.9 ms
```

We can see that they can communicate with each other. At around 23 ms, the latency is definitely higher than the sub-millisecond round trips one would expect if the ping stayed within the local network.
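To put a number on it, a small awk helper (the name `avg_rtt` is hypothetical) can average the `time=` values from ping output. For the four replies from VM 1 above, it prints `24.35 ms over 4 replies`.

```shell
#!/usr/bin/env bash
# Sketch: average the round-trip times that ping reports as "time=... ms".
# Reads ping output on stdin; avg_rtt is a hypothetical helper name.
avg_rtt() {
  awk '
    /time=/ {
      # Find the "time=..." field, strip the prefix and accumulate the value
      for (i = 1; i <= NF; i++)
        if ($i ~ /^time=/) { sub(/^time=/, "", $i); sum += $i; n++ }
    }
    END { if (n) printf "%.2f ms over %d replies\n", sum / n, n }
  '
}

# Usage: ping -c4 10.60.0.2 | avg_rtt
```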

Route to and from VXLAN

Now we can add a bit of routing to the mix. At the time of writing (Proxmox VE 8.2.7), VXLAN zones in Proxmox VE do not support exit nodes. Instead, we can use a VM as a router between the VXLAN network and a physical one.

By adding a second virtual NIC to VM 1, connected to the local LAN, and configuring VM 1 as the default gateway for VM 2, we can end up with a setup like this:

```text
                     ╭────────────╮         ╭────────────╮
                     │ PVE local  │         │   PVE DC   │
                     ├────────────┤         ├────────────┤
╭────────────╮       │    VM 1    │  VXLAN  │    VM 2    │
│  Local PC  │  LAN  │10.60.0.1/24├─────────┤10.60.0.2/24│
│10.0.0.30/24├───────┤10.0.0.20/24│         ╰────────────╯
╰────────────╯       ╰────────────╯
```

On VM 1, we need to enable IPv4 forwarding for it to act as a router.

```text
root@vm1:~# echo 1 > /proc/sys/net/ipv4/ip_forward
```
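Writing to `/proc` like this only lasts until the next reboot. The sketch below collects the router-related steps as shell functions: the second NIC for VM 1, its LAN address plus a persistent forwarding setting via a sysctl.d drop-in, and VM 1 as the default gateway of VM 2. The VM ID, the bridge vmbr0, the interface eth1 and the file name 99-vxlan-router.conf are assumptions; the addresses match the diagram.

```shell
#!/usr/bin/env bash
# Sketch of the router steps. Hypothetical: VM ID, bridge vmbr0, interface eth1
# and the drop-in file name 99-vxlan-router.conf.

# On PVE local: give VM 1 a second NIC on the LAN bridge.
add_lan_nic() {
  local vmid=$1 bridge=$2
  qm set "$vmid" --net1 "virtio,bridge=$bridge"
}

# Inside VM 1 (as root): address the LAN-facing NIC (not persistent) and
# enable IPv4 forwarding persistently through a sysctl.d drop-in.
setup_vm1_router() {
  ip link set dev eth1 up
  ip address add 10.0.0.20/24 dev eth1
  echo 'net.ipv4.ip_forward = 1' > /etc/sysctl.d/99-vxlan-router.conf
  sysctl --system   # load all sysctl.d drop-ins, including the new one
}

# Inside VM 2: send everything outside 10.60.0.0/24 via VM 1.
setup_vm2_gateway() {
  ip route replace default via 10.60.0.1
}

# Usage: add_lan_nic 100 vmbr0 (PVE local); setup_vm1_router (VM 1);
#        setup_vm2_gateway (VM 2)
```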

On the LAN, we need to make sure that traffic to the 10.60.0.0/24 network is routed via VM 1 (10.0.0.20).

This can be achieved by defining a static route on the firewall/router/gateway.

Alternatively, for testing purposes, we can also add the route directly on our local PC.

Assuming the local PC is a Linux machine:

```text
user@local-pc:~$ sudo ip route add 10.60.0.0/24 via 10.0.0.20
```
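A route added with `ip route add` is also lost on reboot. On a local PC whose network is managed by NetworkManager, one way to keep it is a static route on the LAN connection, sketched below. The connection name is hypothetical (list yours with `nmcli connection show`); `ip route get` then shows which route the kernel picks for VM 2.

```shell
#!/usr/bin/env bash
# Sketch for the local PC, assuming NetworkManager manages its LAN connection.
# The connection name passed in is hypothetical.

persist_vxlan_route() {
  local con=$1
  # Append a static route: destination 10.60.0.0/24, next hop VM 1 (10.0.0.20)
  nmcli connection modify "$con" +ipv4.routes "10.60.0.0/24 10.0.0.20" || return 1
  nmcli connection up "$con"   # re-activate the connection to apply the change
}

# Show the route the kernel would use for VM 2 (expected: via 10.0.0.20)
check_vxlan_route() {
  ip route get 10.60.0.2
}

# Usage: persist_vxlan_route "Wired connection 1"; check_vxlan_route
```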

With that explicit route in place and IP forwarding enabled in VM 1, we can try to ping VM 2 from the local PC:

```text
user@local-pc:~$ ping -c 4 10.60.0.2
PING 10.60.0.2 (10.60.0.2) 56(84) bytes of data.
64 bytes from 10.60.0.2: icmp_seq=1 ttl=63 time=52.7 ms
64 bytes from 10.60.0.2: icmp_seq=2 ttl=63 time=74.8 ms
64 bytes from 10.60.0.2: icmp_seq=3 ttl=63 time=24.5 ms
64 bytes from 10.60.0.2: icmp_seq=4 ttl=63 time=26.3 ms
```

We can also do it the other way around, from VM 2 to the local PC:

```text
root@vm2:~# ping -c 4 10.0.0.30
PING 10.0.0.30 (10.0.0.30) 56(84) bytes of data.
64 bytes from 10.0.0.30: icmp_seq=1 ttl=63 time=27.6 ms
64 bytes from 10.0.0.30: icmp_seq=2 ttl=63 time=25.8 ms
64 bytes from 10.0.0.30: icmp_seq=3 ttl=63 time=76.4 ms
64 bytes from 10.0.0.30: icmp_seq=4 ttl=63 time=26.9 ms
```

Nice! We have connected our VXLAN to the local network.

Conclusion

Our small VXLAN setup is finished, and we now have a layer-2 network that spans our local Proxmox VE instance and the remote one.

By giving one VM an additional virtual NIC that connects to the physical network at one location, we can use it as a router for traffic into and out of our VXLAN network. Instead of full-on routing, this router VM could also do NAT and ingress port forwards.
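For the NAT variant, VM 1 would masquerade traffic from the VXLAN that leaves via its LAN NIC, so the LAN needs no extra route, and a DNAT rule could forward an ingress port to a guest. A minimal iptables sketch, assuming eth1 is VM 1's LAN-facing NIC and, purely as an example, exposing port 80 on VM 2 as port 8080 on VM 1; IP forwarding still has to be enabled, and the rules are not persistent.

```shell
#!/usr/bin/env bash
# Sketch for VM 1 as a NAT router instead of a plain router (run as root).
# Assumptions: eth1 faces the LAN; port 8080 -> 10.60.0.2:80 is only an example.
LAN_IF=eth1

enable_nat() {
  # Rewrite the source address of VXLAN traffic leaving on the LAN side to VM 1's
  MASQ_SRC=10.60.0.0/24
  iptables -t nat -A POSTROUTING -s "$MASQ_SRC" -o "$LAN_IF" -j MASQUERADE
}

forward_port() {
  local lan_port=$1 target=$2   # target given as IP:port inside the VXLAN
  # Redirect TCP connections arriving on the LAN side to a VXLAN guest
  iptables -t nat -A PREROUTING -i "$LAN_IF" -p tcp --dport "$lan_port" \
    -j DNAT --to-destination "$target"
}

# Usage: enable_nat; forward_port 8080 10.60.0.2:80
```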

Will this scale to very big environments? Probably not, because VXLAN on its own is really just a basic layer-2 network. That means all broadcast traffic is flooded to every VTEP and is visible to every guest in the VXLAN.

For large environments, it is useful to add a control plane that distributes information, such as which MAC addresses sit behind which VTEP, in a more efficient way. EVPN is such a control plane, and it requires a bit more infrastructure.